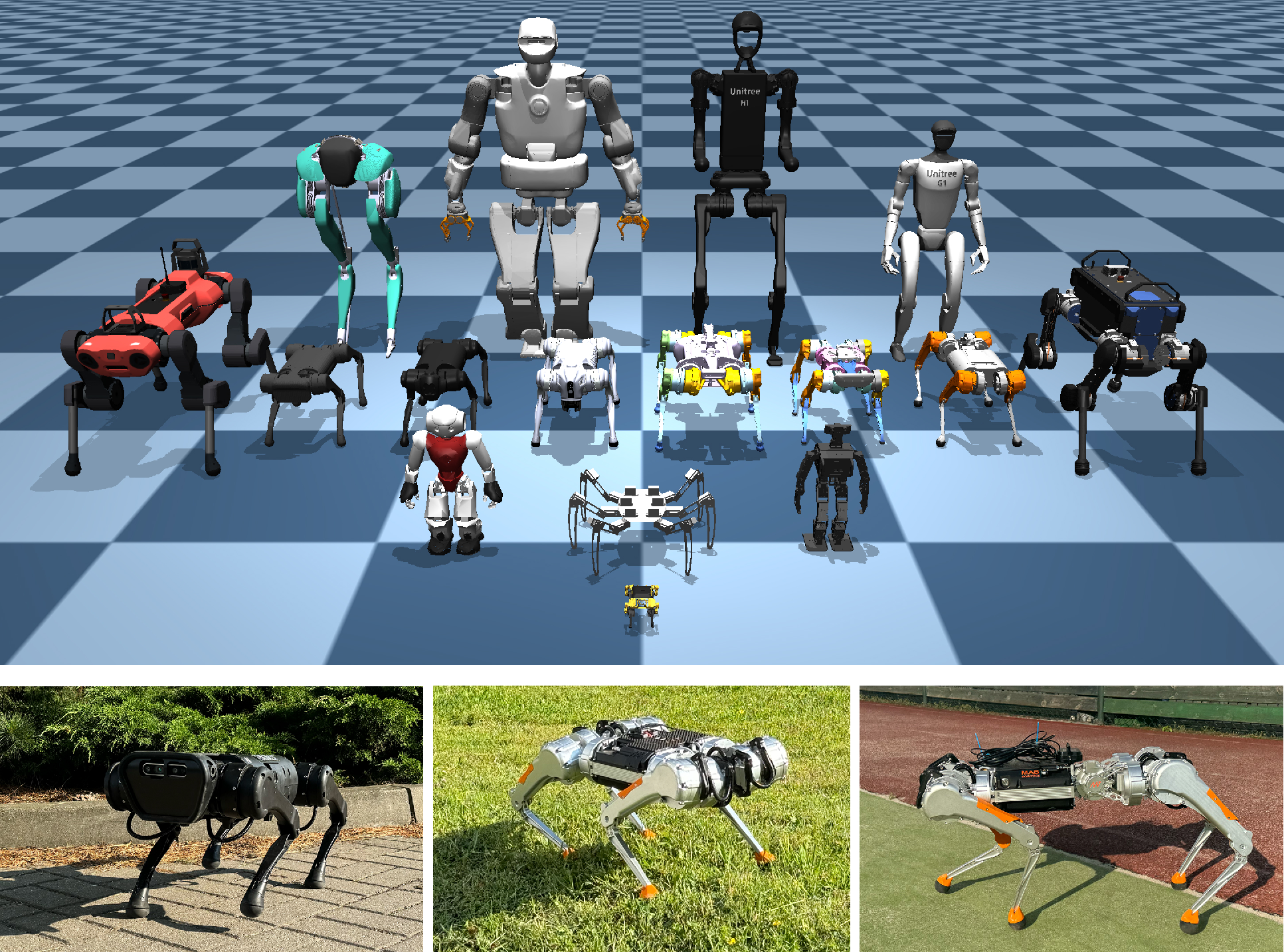

Do you need a new locomotion policy for every new robot? No! We can train a single general locomotion policy for any legged robot embodiment and morphology!

Unified Robot Morphology Architecture

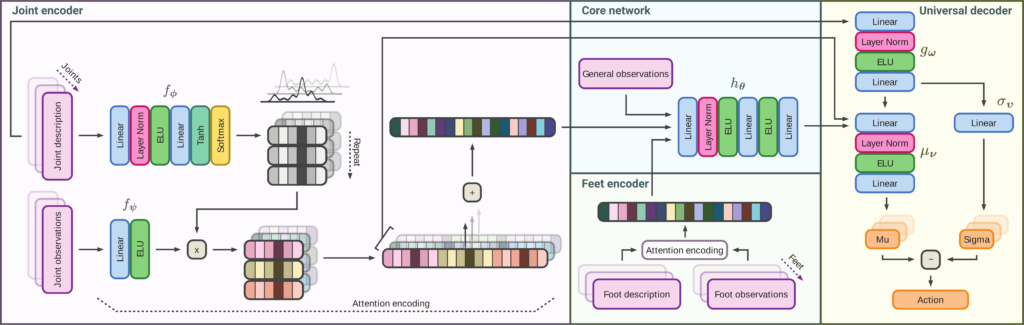

Our Unified Robot Morphology Architecture can handle any robot morphology. To achieve this, we design our network using three main components:

- A simple attention encoder for joint and feet information

- A core network, which learns the meta locomotion gait

- A universal decoder, generating actions for each joint

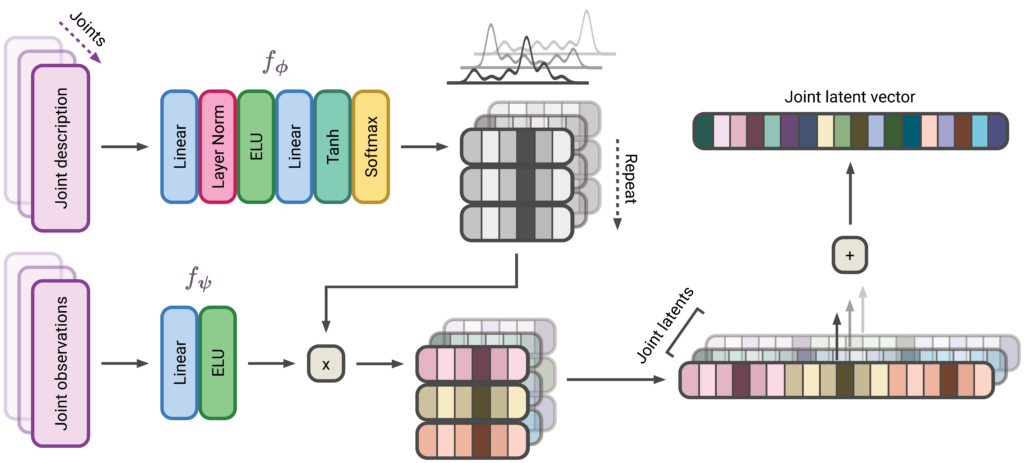

The URMA encoder

To handle observations of any morphology, URMA splits observations into robot-specific and general parts. Robot-specific observations are joint (and foot) observations: they share the same structure, but their number varies from robot to robot. Since standard neural network layers expect fixed-length vectors, we need a mechanism that takes any number of joint observations and routes them into a single latent vector holding the information of all joints. Similar joints from different robots should map to similar regions of the latent vector.

URMA uses a simple attention encoder where joint description vectors act as the keys and the joint observations as the values in the attention mechanism.

The same attention encoding is used for the foot observations.
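
To make the idea concrete, here is a minimal NumPy sketch of such an encoder. It is not the URMA implementation: the class name `AttentionEncoder`, the linear projections `W_key` and `W_val`, the fixed `temperature`, and all dimensions are assumptions made for illustration. The point is only that attention weights come from the descriptions, values come from the observations, and the weighted sum has the same size for any number of joints.

```python
import numpy as np


def softmax(x, axis=0):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


class AttentionEncoder:
    """Hypothetical sketch: descriptions give the keys, observations give the values."""

    def __init__(self, desc_dim, obs_dim, latent_dim, temperature=1.0, seed=0):
        rng = np.random.default_rng(seed)
        # Linear maps stand in for whatever learned encoders the real model uses.
        self.W_key = rng.normal(scale=0.1, size=(desc_dim, latent_dim))
        self.W_val = rng.normal(scale=0.1, size=(obs_dim, latent_dim))
        self.temperature = temperature

    def __call__(self, descriptions, observations):
        # descriptions: (num_joints, desc_dim), observations: (num_joints, obs_dim)
        keys = descriptions @ self.W_key
        values = observations @ self.W_val
        # Normalise over the joint axis, so each latent dimension is a
        # weighted average of the per-joint values.
        weights = softmax(keys / self.temperature, axis=0)
        return (weights * values).sum(axis=0)  # shape: (latent_dim,)
```

Calling the same encoder on a 12-joint and a 10-joint robot returns a vector of length `latent_dim` in both cases, and the result does not depend on the order in which the joints are listed.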

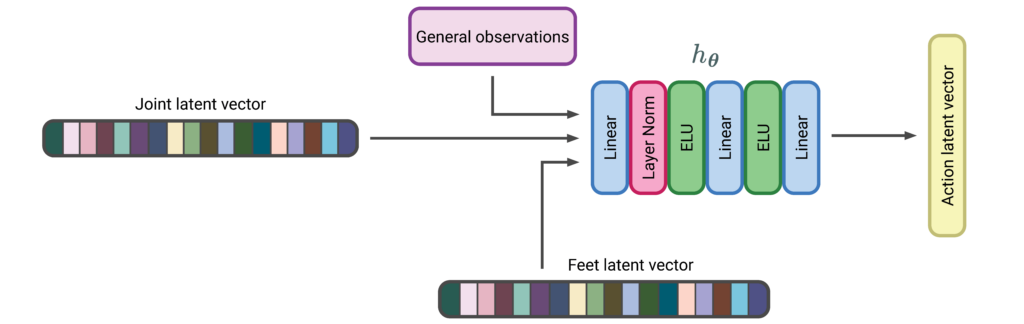

The Core network

The resulting joint and feet latent vectors are concatenated with the general observations and passed to the policy’s core network. The output of the network is the action latent vector, which represents a meta locomotion action and is ready to be decoded into an action for the specific embodiment!
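
The data flow through the core network can be sketched in a few lines. This is an illustration under assumptions, not the actual architecture: the two-layer MLP, the `tanh` activation, and the helper names `init_core_params` and `core_network` are all hypothetical. What matters is that the input size is fixed regardless of the robot.

```python
import numpy as np


def init_core_params(in_dim, hidden_dim, action_latent_dim, seed=0):
    """Random weights for a small two-layer MLP (sizes are illustrative)."""
    rng = np.random.default_rng(seed)
    return {
        "W1": rng.normal(scale=0.1, size=(in_dim, hidden_dim)),
        "b1": np.zeros(hidden_dim),
        "W2": rng.normal(scale=0.1, size=(hidden_dim, action_latent_dim)),
        "b2": np.zeros(action_latent_dim),
    }


def core_network(joint_latent, feet_latent, general_obs, params):
    """Concatenate the fixed-size inputs and map them to an action latent."""
    x = np.concatenate([joint_latent, feet_latent, general_obs])
    hidden = np.tanh(x @ params["W1"] + params["b1"])
    return hidden @ params["W2"] + params["b2"]
```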

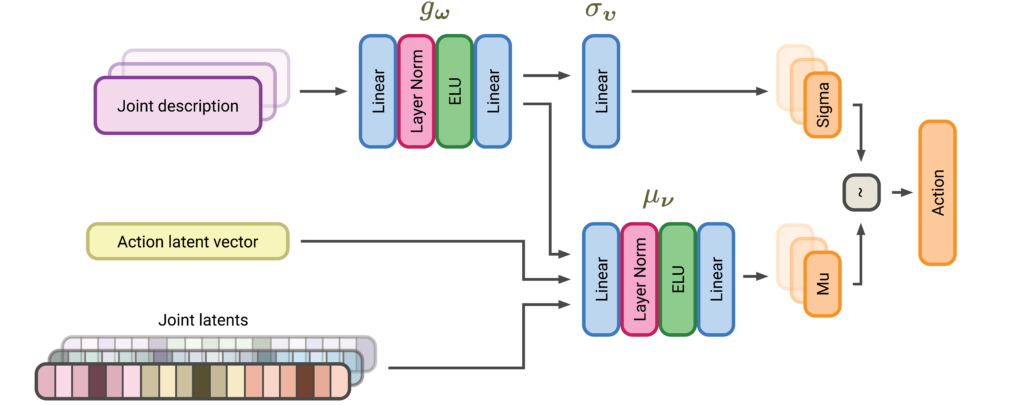

The Universal decoder

Finally, we use our universal morphology decoder, which takes the output of the core network and pairs it with the batch of joint descriptions and single joint latents to produce the final action for every given joint.
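
A minimal sketch of this decoding step, assuming a single linear layer with a `tanh` squashing (both are assumptions, as are the function name `decode_actions` and the parameter shapes): the action latent is repeated once per joint, concatenated with that joint's description and latent, and mapped to one scalar action. Because the same weights are applied to every row, the number of joints is free to change.

```python
import numpy as np


def decode_actions(action_latent, joint_descriptions, joint_latents, W, b):
    """Hypothetical per-joint decoder.

    action_latent:      (action_latent_dim,)
    joint_descriptions: (num_joints, desc_dim)
    joint_latents:      (num_joints, joint_latent_dim)
    W:                  (action_latent_dim + desc_dim + joint_latent_dim,)
    b:                  scalar bias
    """
    num_joints = joint_descriptions.shape[0]
    # Repeat the shared action latent once per joint.
    tiled = np.broadcast_to(action_latent, (num_joints, action_latent.shape[0]))
    per_joint_input = np.concatenate(
        [tiled, joint_descriptions, joint_latents], axis=1
    )
    # Same weights for every joint, one bounded action per joint.
    return np.tanh(per_joint_input @ W + b)  # shape: (num_joints,)
```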

Results

Our URMA policy outperforms classic multi-task RL approaches, showing strong robustness and zero-shot capabilities, both in simulation and in the real world!

Results in simulation

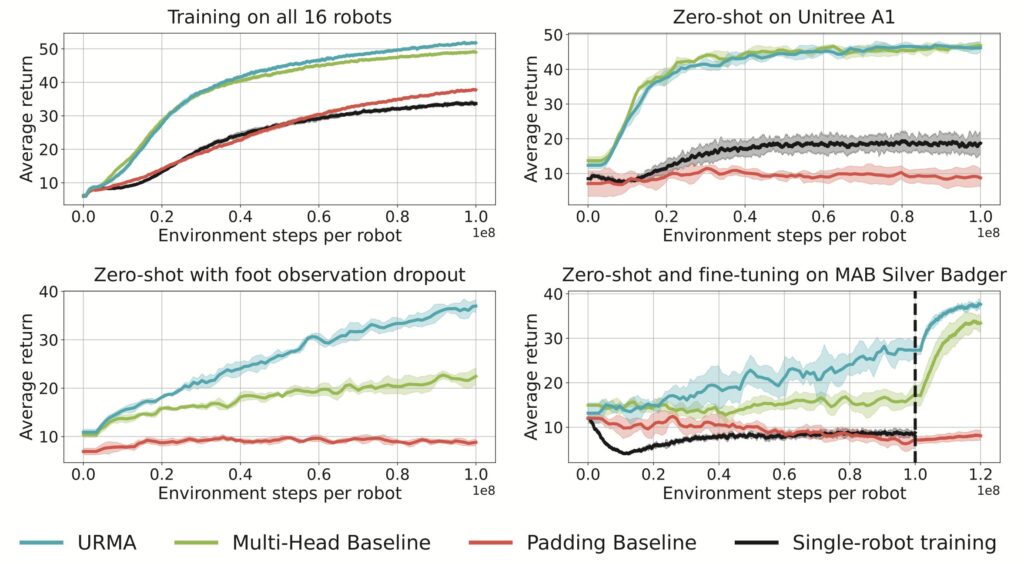

We compared our approach against the following baselines:

- Single robot baseline: we train a separate network from scratch for each robot

- Padding baseline: this approach uses a single network, padding the input and the output of the network to support the maximum embodiment input/output size

- Multi-head baseline: The typical multitask learning architecture, with encoders and decoders learned per embodiment, but using a shared core network
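
For intuition, the padding baseline boils down to something like the following sketch. The helper names `pad_observation` and `crop_action` and the use of zeros as the padding value are assumptions; the idea is simply that every robot's observation is stretched to the largest size in the training set, and the surplus action outputs are discarded.

```python
import numpy as np


def pad_observation(obs, max_dim):
    """Zero-pad a 1-D observation up to the largest observation size."""
    if obs.shape[0] > max_dim:
        raise ValueError("observation is larger than max_dim")
    padded = np.zeros(max_dim, dtype=obs.dtype)
    padded[: obs.shape[0]] = obs
    return padded


def crop_action(padded_action, num_joints):
    """Keep only the action entries that correspond to real joints."""
    return padded_action[:num_joints]
```

Unlike the attention encoder, this scheme ties each input slot to a fixed position, so a robot with more joints than `max_dim` allows cannot be handled without retraining.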

During training, URMA achieves higher final performance than all baselines. Where it really shines, though, is zero-shot deployment and adaptation:

- If the robot embodiment is similar to other embodiments in the training (Unitree A1), the zero-shot transfer works seamlessly

- URMA zero-shot transfer works well even if we remove some observations, for example, if we train with foot contact information and we hide it during deployment

- If the robot embodiment is out of distribution, e.g., when we deploy on our MAB Silver Badger robot, the performance drop is bigger, but URMA still outperforms the baselines.

- If we fine-tune on the MAB Silver Badger robot, URMA achieves better final performance than all the baselines.

Results in the real world

URMA can be easily deployed in the real world! After simulation training, the policy is deployed on two quadruped robots from the training set in the real world. Extensive domain randomization during training allows the policy to transfer directly to real robots without any further adaptation.
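
Domain randomization, in its simplest form, means resampling simulator parameters at the start of each episode. The sketch below shows the pattern only: the parameter names and ranges in `RANDOMIZATION_RANGES` are placeholders invented for this example, not the values used to train URMA.

```python
import random

# Hypothetical parameters and ranges, chosen only for illustration.
RANDOMIZATION_RANGES = {
    "trunk_mass_scale": (0.8, 1.2),
    "ground_friction": (0.4, 1.2),
    "motor_strength_scale": (0.9, 1.1),
    "action_delay_steps": (0, 2),  # integer range, sampled inclusively
}


def sample_episode_parameters(rng):
    """Draw one set of simulator parameters for a new training episode."""
    params = {}
    for name, (low, high) in RANDOMIZATION_RANGES.items():
        if isinstance(low, int) and isinstance(high, int):
            params[name] = rng.randint(low, high)
        else:
            params[name] = rng.uniform(low, high)
    return params
```

A training loop would call `sample_episode_parameters(random.Random(seed))` on every reset and apply the result to the simulator before the episode starts.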

Moreover, thanks to the wide variety of robots in the training set, the randomization of their properties, and the morphology-agnostic URMA architecture, the policy can also generalize to new robots never seen during the training process!

More information

If you are interested in URMA, you can take a look at

- Our CoRL 2024 paper: https://openreview.net/pdf?id=PbQOZntuXO

- The paper website: https://nico-bohlinger.github.io/one_policy_to_run_them_all_website/

The project website includes a cool demo of URMA directly embedded in your browser!