Navigation is challenging for quadruped robots, and even more so for small robots, which must carry cheap sensors because of payload and cost constraints.

The problem becomes harder still with policies learned through Reinforcement Learning: these policies are robust, but they can produce jerky movements that lead to localization loss.

As perception is a fundamental component of INTENTION, we decided to tackle this problem in collaboration with Prof. Matteo Luperto at Università degli Studi di Milano and with our visiting scholar, Dyuman Aditya.

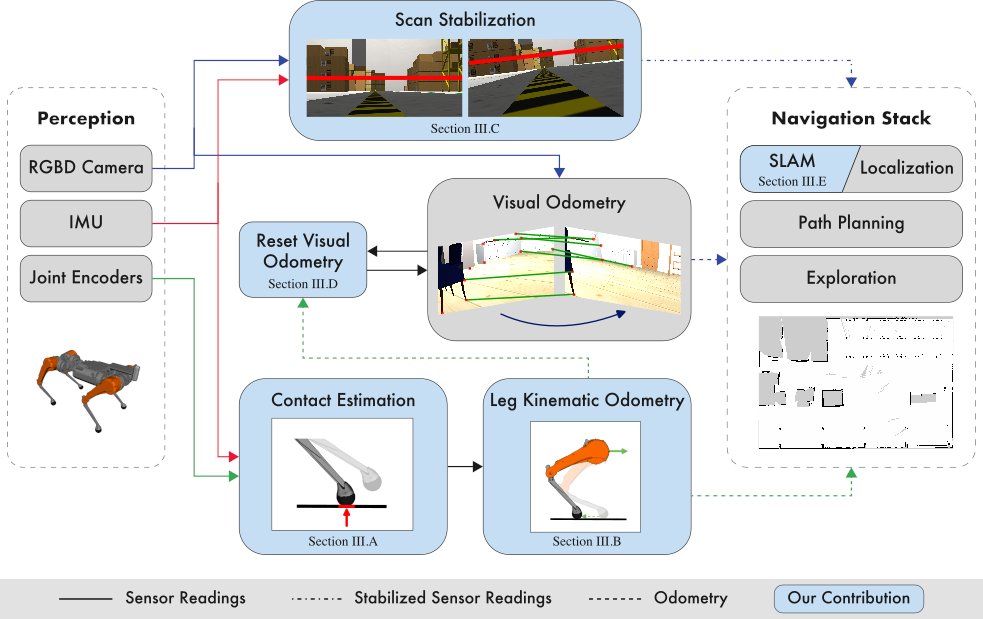

Our system leverages data from low-cost sensors (an RGB-D camera, an IMU, and joint encoders), augmented by scan stabilization and leg odometry modules, to achieve accurate localization and mapping. These modules are essential for quadruped robots with reactive, high-speed controllers to navigate complex 2D environments effectively.
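To give an idea of what scan stabilization involves, here is a minimal sketch, assuming the RGB-D depth image has already been converted into 3D points in the robot base frame (x forward, y left, z up) and that roll and pitch come from the IMU. It levels each point using the IMU attitude and only then applies a height band to build a 2D scan, so body pitching and rolling do not push obstacles out of the scan or floor returns into it. The function names, the height band, and the beam count are hypothetical; this is not necessarily how the module in quad-stack is implemented.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def rotation_from_roll_pitch(roll: float, pitch: float) -> List[List[float]]:
    """Return R = Ry(pitch) @ Rx(roll), mapping base-frame vectors into a
    gravity-aligned frame with zero yaw (ZYX Euler convention)."""
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    return [
        [cp, sp * sr, sp * cr],
        [0.0, cr, -sr],
        [-sp, cp * sr, cp * cr],
    ]


def stabilized_scan(
    points: Sequence[Point],
    roll: float,
    pitch: float,
    num_beams: int = 360,
    z_min: float = -0.1,  # hypothetical height band [m] relative to the base
    z_max: float = 0.3,
    range_max: float = 5.0,
) -> List[float]:
    """Project base-frame 3D points into a 2D scan after removing roll/pitch."""
    R = rotation_from_roll_pitch(roll, pitch)
    ranges = [math.inf] * num_beams  # inf means "no return" in that beam
    for x, y, z in points:
        # Level the point: rotate it by the IMU attitude so that z is vertical.
        lx = R[0][0] * x + R[0][1] * y + R[0][2] * z
        ly = R[1][0] * x + R[1][1] * y + R[1][2] * z
        lz = R[2][0] * x + R[2][1] * y + R[2][2] * z
        # Keep only points inside the height band; with the base well above the
        # floor, this also discards floor returns while the body pitches or rolls.
        if not (z_min <= lz <= z_max):
            continue
        r = math.hypot(lx, ly)
        if r > range_max:
            continue
        # Map the bearing in [-pi, pi] to a beam index and keep the closest hit.
        angle = math.atan2(ly, lx)
        idx = int((angle + math.pi) / (2.0 * math.pi) * num_beams) % num_beams
        ranges[idx] = min(ranges[idx], r)
    return ranges
```

For a wall point 2 m ahead observed while the body is pitched by 0.2 rad, the leveled scan reports a range of about 2 m in the forward beam. Treating the same raw point as level (roll = pitch = 0) places it about 0.4 m above the base, outside the band, so the obstacle would silently disappear from the scan.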

The leg odometry (LO) module requires contact sensors mounted on the legs of the robot. For platforms that lack these sensors, we provide a dedicated contact estimation module.
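The sketch below shows how contact flags can feed leg odometry. It assumes a per-foot normal-force estimate is available (for example, derived from joint torques), and that foot positions and velocities relative to the base come from forward kinematics. The hysteresis thresholds and the class and function names are hypothetical. Threshold-based detection is only one simple stand-in and is not necessarily how the contact estimation module in quad-stack works.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def cross(a: Vec3, b: Vec3) -> Vec3:
    """Cross product a x b."""
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


@dataclass
class HysteresisContactEstimator:
    """Hypothetical contact detector: thresholds an estimated normal force per
    foot with two thresholds, so the flag does not chatter around one value."""
    on_threshold: float = 40.0   # [N] swing -> stance above this (illustrative)
    off_threshold: float = 20.0  # [N] stance -> swing below this (illustrative)
    in_contact: List[bool] = field(default_factory=lambda: [False] * 4)

    def update(self, foot_forces: Sequence[float]) -> List[bool]:
        for i, force in enumerate(foot_forces):
            if self.in_contact[i]:
                self.in_contact[i] = force > self.off_threshold
            else:
                self.in_contact[i] = force > self.on_threshold
        return list(self.in_contact)


def leg_odometry_velocity(
    foot_positions: Sequence[Vec3],   # feet in the base frame (forward kinematics)
    foot_velocities: Sequence[Vec3],  # feet relative to the base (Jacobian @ qdot)
    omega: Vec3,                      # base angular velocity from the IMU gyro
    contacts: Sequence[bool],
) -> Optional[Vec3]:
    """Average base linear velocity (base frame) implied by the stance feet,
    assuming stance feet do not slip: v_base = -(v_rel + omega x r)."""
    estimates = []
    for r, v_rel, in_contact in zip(foot_positions, foot_velocities, contacts):
        if not in_contact:
            continue  # swing feet carry no information about base motion
        w_x_r = cross(omega, r)
        estimates.append(tuple(-(v_rel[k] + w_x_r[k]) for k in range(3)))
    if not estimates:
        return None  # no stance feet (e.g. flight phase): rely on other sources
    n = len(estimates)
    return tuple(sum(e[k] for e in estimates) / n for k in range(3))
```

Each stance foot yields one velocity estimate under the no-slip assumption, and averaging them dampens per-leg noise. In a full pipeline this velocity would typically be integrated, or fused with the IMU, to produce the odometry consumed by the SLAM front end.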

Results

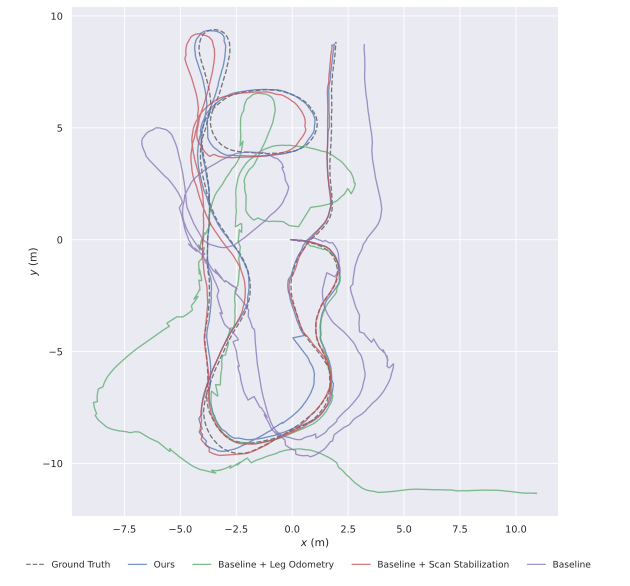

In simulated environments, our system performs well in localization, mapping, navigation, and exploration.

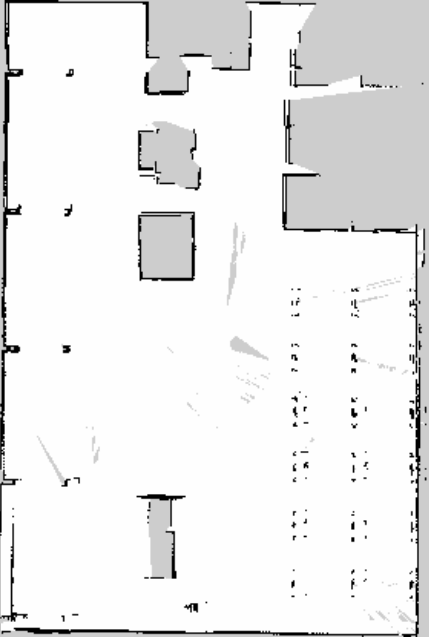

We also validated our mapping results in the real world, including in extremely cluttered environments such as the lab at PUT:

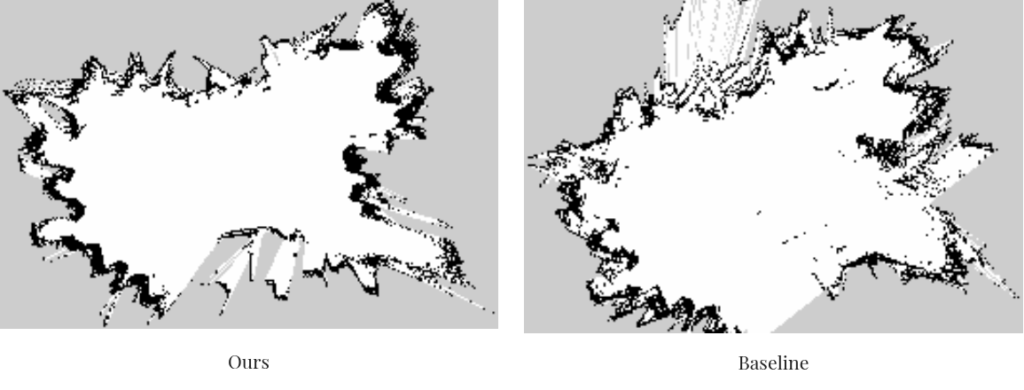

Even in these conditions, we achieved good mapping results, particularly when compared with the baselines.

Our system allows quadruped robots to autonomously explore complex environments without major issues.

More info

If you are interested in learning more about this project, take a look at:

- Our ECMR 2025 paper: https://arxiv.org/abs/2505.02272

- Our project website: https://sites.google.com/view/low-cost-quadruped-slam

- Our open source stack: https://github.com/dyumanaditya/quad-stack